🕒 Estimated time: 10–15 minutes

🧠 What You'll Learn

This chapter introduces the three foundational capability types in the Model Context Protocol (MCP). These capabilities define how large language models (LLMs) interact with their environment. You’ll learn:

What Tools, Resources, and Prompts are

How they differ in behavior and purpose

When to use each in a real-world AI system

How to implement them using Python decorators

⚙️ MCP Capabilities Overview

In MCP, a capability is a function, data source, or message template that a server exposes to an LLM through a standardized protocol. Capabilities are modular, composable, and discoverable (a client can ask a server at runtime which ones it offers), much like Kubernetes objects. There are three types:

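Tool: A Tool is a callable function. It performs an action, such as fetching data, executing logic, or interacting with external systems. Tools are the active interface between the LLM and the world, and they are model-controlled: the LLM decides when to call them.

Resource: A Resource is a read-only data source. It provides factual context that the LLM can use to inform its reasoning. Resources do not perform actions; they simply supply information. They are application-controlled: the host application decides which resources to include in the model’s context.

Prompt: A Prompt is a predefined message template. It guides the LLM’s behavior, tone, and task. Prompts are essential for structuring interactions and ensuring consistent output. They are user-controlled: clients typically surface them so that a user can select one explicitly, for example as a slash command.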
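To make the three roles concrete before introducing FastMCP, here is a toy, in-memory sketch. It is not FastMCP's implementation and not part of MCP: a hypothetical Registry class whose decorators file functions under tools, resources, or prompts, and whose describe() method lists them, which is roughly what "discoverable" means for a real server. All names and sample data are illustrative.

```python
from typing import Callable


class Registry:
    """Toy capability registry; a hypothetical stand-in for an MCP server object."""

    def __init__(self) -> None:
        self.tools: dict[str, Callable] = {}      # actions the model can invoke
        self.resources: dict[str, Callable] = {}  # read-only data, keyed by URI
        self.prompts: dict[str, Callable] = {}    # message-template renderers

    @staticmethod
    def _register(table: dict[str, Callable], key: str) -> Callable:
        # Decorator factory: store the function under `key` and return it unchanged.
        def decorator(fn: Callable) -> Callable:
            table[key] = fn
            return fn

        return decorator

    def tool(self, name: str) -> Callable:
        return self._register(self.tools, name)

    def resource(self, uri: str) -> Callable:
        return self._register(self.resources, uri)

    def prompt(self, name: str) -> Callable:
        return self._register(self.prompts, name)

    def describe(self) -> dict[str, list[str]]:
        # Discovery: list everything the registry exposes, grouped by kind.
        return {
            "tools": sorted(self.tools),
            "resources": sorted(self.resources),
            "prompts": sorted(self.prompts),
        }


registry = Registry()


@registry.tool("add")
def add(a: int, b: int) -> int:
    return a + b  # a tool acts: it computes (or changes) something


@registry.resource("resource://demo/cities")
def cities() -> list[str]:
    return ["Helsinki", "Tampere"]  # a resource only returns data


@registry.prompt("summarize")
def summarize(text: str) -> str:
    return f"Summarize the following text:\n{text}"  # a prompt renders instructions


print(registry.describe())
```

The decorators return the original function unchanged, so each capability stays an ordinary Python function that can still be called and tested directly.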

🛠️ Tools – Functions That Act

Tools are executable functions. When an LLM calls a tool, it’s not just generating text—it’s performing a task. This could be anything from querying a database to calling an external API.

Use tools when you need the AI to do something: fetch weather data, calculate a value, or trigger a workflow. Tools are dynamic and often produce side effects.
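Under the hood, an MCP client asks a server to run a tool with a JSON-RPC 2.0 request whose method is tools/call, carrying the tool's name and its arguments. The standard-library sketch below only builds that message (it does not send it); make_tool_call is a hypothetical helper, and the get_weather call mirrors the hands-on example later in this chapter. In practice, a client library such as fastmcp constructs these messages for you.

```python
import json
from typing import Any


def make_tool_call(request_id: int, name: str, arguments: dict[str, Any]) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,  # echoed back in the response so replies can be matched
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(message)


print(make_tool_call(1, "get_weather", {"city": "Helsinki"}))
```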

📚 Resources – Context That Informs

Resources are passive data providers. They don’t perform actions but serve as knowledge bases the LLM can consult. Resources enrich the model’s reasoning with structured, factual information.

Use resources when the AI needs access to static or dynamic data: a list of supported cities, user preferences, or a product catalog. Resources are read-only and safe to query.
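Resources are also reached through JSON-RPC, but with a separate, read-only method: per the MCP specification, a resources/read request names a resource by its uri, and the result wraps the data in a contents list. This standard-library sketch builds such a request and decodes a hand-written result. The helper names are hypothetical, the URI anticipates the locations resource defined later in this chapter, and the JSON-encoded text assumes the server serializes the list that way.

```python
import json
from typing import Any


def make_resource_read(request_id: int, uri: str) -> dict[str, Any]:
    # resources/read identifies the data by URI only; there are no arguments.
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "resources/read",
        "params": {"uri": uri},
    }


def read_text(result: dict[str, Any]) -> str:
    # A read result wraps the data in a "contents" list; text resources carry
    # a "text" field (binary ones use a base64 "blob" field instead).
    return "".join(item["text"] for item in result["contents"] if "text" in item)


request = make_resource_read(2, "resource://ai/locations")

# Hand-written result for illustration (not captured server output).
result = {
    "contents": [
        {
            "uri": "resource://ai/locations",
            "mimeType": "application/json",
            "text": json.dumps(["Helsinki", "Tampere", "Oulu", "Vaasa"]),
        }
    ]
}

print(request["method"], json.loads(read_text(result)))
```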

🧾 Prompts – Templates That Guide

Prompts define how the LLM should behave. They’re structured message templates that shape tone, role, and task. Prompts are essential for consistency and composability in AI workflows.

Use prompts when you want to standardize behavior: instruct the AI to act as a travel assistant, summarize a document, or generate code. Prompts are declarative and reusable.
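Unlike a tool, a prompt executes nothing: when a client requests one (the prompts/get method, with the prompt's name and string arguments), the server returns a list of rendered messages for the client to pass to the model. In the MCP specification each message has the role "user" or "assistant" plus a content item; there is no separate "system" role. A minimal standard-library sketch of the rendering step, using a hypothetical render_prompt helper and the summarization use case mentioned above:

```python
from typing import Any


def render_prompt(template: str, arguments: dict[str, str]) -> dict[str, Any]:
    """Render a single-message prompts/get result from a template (hypothetical helper)."""
    # Prompt arguments are strings; str.format fills named placeholders such as {document}.
    text = template.format(**arguments)
    return {
        "messages": [
            # MCP prompt messages use the "user" or "assistant" role.
            {"role": "user", "content": {"type": "text", "text": text}},
        ]
    }


result = render_prompt(
    "Summarize the following document:\n{document}",
    {"document": "MCP defines tools, resources, and prompts."},
)
print(result["messages"][0]["content"]["text"])
```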

🧠 Example Scenario: Travel Agent AI

Imagine building a travel assistant powered by MCP. Each capability plays a role:

The Tool: get_flight_prices() fetches flight rates.

The Resource: travel_preferences.json provides user context.

The Prompt: “You are a travel assistant...” guides tone and behavior.

Together, these capabilities form a coherent, modular system that can reason, act, and communicate effectively.

✅ Hands-On: Define One of Each Capability

Let’s implement each capability type using a simple weather service example. The full source code is available on GitHub.

1. Project Structure & Setup

Your project should be organized as follows:

```text
exercise-2/
├── mcp_capabilities/
│   ├── server.py        # Defines MCP capabilities
│   └── client.py        # Demonstrates client usage
└── requirements.txt     # fastmcp dependency
```

Instructions:

Ensure you have Python 3.12+ installed.

Install dependencies with:

```bash
pip install -r requirements.txt
```

Place your capability definitions (tools, resources, prompts) in server.py.

Use client.py to interact with your MCP server and test the capabilities.

2. Defining Capabilities in the Server

Open server.py and define your MCP capabilities as follows:

2.1. Tools

Tools are callable functions that perform actions.

For example, get_weather returns the weather for a given city in Finland.

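```python
from fastmcp import FastMCP

# Server instance that all capability decorators register with;
# the name "weather-server" is an illustrative placeholder.
mcp = FastMCP("weather-server")


@mcp.tool(name="get_weather", description="Returns weather for a given city.")
def get_weather(city: str):
    # Hard-coded sample data standing in for a real weather API.
    current_weather = {
        "Helsinki": "The weather is sunny. The temperature is 27°C. No rain expected.",
        "Tampere": "The weather is cloudy. The temperature is 25°C. Light rain expected.",
        "Oulu": "The weather is rainy. The temperature is 22°C. Heavy rain expected.",
        "Vaasa": "The weather is windy. The temperature is 24°C. No rain expected.",
    }
    # Printed to the server's console; this is not part of the tool result.
    print(f"Fetching weather for {city}...")
    # Exact (case-sensitive) match; unknown cities fall back to "Unknown".
    return {"city": city, "weather": current_weather.get(city, "Unknown")}
```

2.2. Resources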

Resources are static or dynamic data collections.

Here, locations is a resource listing available cities.
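The locations resource lists the same four cities that get_weather hard-codes, so adding a city means editing two places. One option, sketched here with plain functions so it runs and can be unit-tested without a server, is to derive both from a single table. CURRENT_WEATHER, lookup_weather, and list_locations are hypothetical names; in server.py the decorated get_weather and locations functions would simply call them.

```python
# Hypothetical single source of truth shared by the tool and the resource.
CURRENT_WEATHER: dict[str, str] = {
    "Helsinki": "The weather is sunny. The temperature is 27°C. No rain expected.",
    "Tampere": "The weather is cloudy. The temperature is 25°C. Light rain expected.",
    "Oulu": "The weather is rainy. The temperature is 22°C. Heavy rain expected.",
    "Vaasa": "The weather is windy. The temperature is 24°C. No rain expected.",
}


def lookup_weather(city: str) -> dict[str, str]:
    # Same behavior as get_weather: exact, case-sensitive match, "Unknown" otherwise.
    return {"city": city, "weather": CURRENT_WEATHER.get(city, "Unknown")}


def list_locations() -> list[str]:
    # Same data the locations resource returns, derived from the table's keys.
    return list(CURRENT_WEATHER)


print(list_locations())
print(lookup_weather("helsinki")["weather"])
```

The second print shows Unknown because the lookup is case-sensitive, which is also how the original get_weather behaves.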

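```python
# `mcp` is the FastMCP instance created at the top of server.py.
@mcp.resource("resource://ai/locations")
def locations() -> list[str]:
    # Read-only list of the cities get_weather has data for.
    return [
        "Helsinki",
        "Tampere",
        "Oulu",
        "Vaasa",
    ]
```

2.3. Prompts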

Prompts are templates for generating conversational context or instructions.

The get_weather_prompt provides a prompt for asking about the weather in a city.
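Prompt templates usually interpolate their arguments into the message text, and in Python the braces are only substituted when the string literal carries the f prefix; a plain string keeps {location} as literal characters, so it is worth checking every template string. A quick standard-library check (the variable names are illustrative):

```python
location = "Tampere"

# Without the f prefix, the braces are ordinary characters.
plain = "Please give me the weather of the city {location}."
# With the f prefix, Python evaluates {location} when the string is built.
formatted = f"Please give me the weather of the city {location}."

print(plain)
print(formatted)
```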

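```python
from mcp.types import PromptMessage, TextContent


# `mcp` is the FastMCP instance created at the top of server.py.
@mcp.prompt("get_weather")
def get_weather_prompt(location: str) -> list[PromptMessage]:
    # MCP prompt messages support only the "user" and "assistant" roles,
    # so the assistant persona is stated inside the user message.
    # The f prefix makes Python substitute the location argument.
    text = f"You are a weather assistant. Please give me the weather of the city {location}."
    return [PromptMessage(role="user", content=TextContent(type="text", text=text))]
```

2.4. Running the Server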

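Start the server with:

```bash
python mcp_capabilities/server.py
```

You should see a message indicating the server is running. This assumes server.py starts the server itself when executed as a script, for example by calling mcp.run() with an HTTP transport at the end of the file.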
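Before starting the client, it can help to confirm that something is listening on the server's port. This standard-library sketch only tests TCP reachability, not MCP itself; the host 127.0.0.1 and port 8000 are assumptions, so use whatever address your server reports at startup.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Assumed address; adjust it to match your server.
    print(is_listening("127.0.0.1", 8000))
```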

3. Using the Client

Open mcp_capabilities/client.py to interact with your MCP server. The client demonstrates how to:

Connect to the MCP server

Discover available tools, resources, and prompts

Fetch resources

Call tools

Generate a prompt to use with an LLM

3.1. Creating and Connecting the MCP Client

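```python
from fastmcp import Client
from fastmcp.client.transports import StreamableHttpTransport

# Inside an async function. SERVER_URL is the server's MCP endpoint,
# e.g. "http://127.0.0.1:8000/mcp" (assumed; match your server's address).
transport = StreamableHttpTransport(url=SERVER_URL)
client = Client(transport)

async with client:
    ...  # code using the client
```

This snippet creates a transport to the MCP server and initializes the client. The async with client block ensures the client connection is properly managed (opened and closed) for all subsequent operations.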
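Entering async with client is the point where the MCP session is opened. In the MCP specification, the client starts by sending an initialize request with its supported protocol version, capabilities, and identity; after the server answers with its own capabilities, the client sends a notifications/initialized notification, which has no id because no reply is expected. FastMCP's Client handles this exchange for you; the standard-library sketch below only builds the two messages, and the client name, version, and protocol revision string are illustrative assumptions.

```python
import json


def handshake_messages(client_name: str, client_version: str, protocol_version: str) -> list[str]:
    """Build the two client messages that open an MCP session (illustrative values only)."""
    initialize = {
        "jsonrpc": "2.0",
        "id": 0,
        "method": "initialize",
        "params": {
            "protocolVersion": protocol_version,
            "capabilities": {},  # this sketch's client advertises no optional features
            "clientInfo": {"name": client_name, "version": client_version},
        },
    }
    # Sent after the server's initialize response; notifications carry no "id".
    initialized = {"jsonrpc": "2.0", "method": "notifications/initialized"}
    return [json.dumps(initialize), json.dumps(initialized)]


for line in handshake_messages("weather-demo-client", "0.1.0", "2025-03-26"):
    print(line)
```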

3.2. Discovering Server Capabilities

```python
import pprint

pp = pprint.PrettyPrinter()

# Inside the `async with client:` block:
print("🛠️ Available tools:")
pp.pprint(await client.list_tools())
print("\n📚 Available resources:")
pp.pprint(await client.list_resources())
print("\n💬 Available prompts:")
pp.pprint(await client.list_prompts())
```

This section queries the server for its available tools, resources, and prompts, then prints them in a readable format. It helps the user discover what actions and data the server exposes.
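These discovery calls correspond to the tools/list, resources/list, and prompts/list methods. A tools/list result describes each tool by name, description, and a JSON Schema (inputSchema) for its arguments, which is how a model learns that get_weather expects a string city. The dictionary below is a hand-written illustration of that shape, not captured server output; the exact schema FastMCP generates may include extra fields such as titles. required_arguments is a hypothetical helper.

```python
from typing import Any

# Hand-written example of a tools/list result (not real server output).
tools_list_result: dict[str, Any] = {
    "tools": [
        {
            "name": "get_weather",
            "description": "Returns weather for a given city.",
            "inputSchema": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        }
    ]
}


def required_arguments(result: dict[str, Any]) -> dict[str, list[str]]:
    # Map each tool name to the argument names its schema marks as required.
    return {
        tool["name"]: tool["inputSchema"].get("required", [])
        for tool in result["tools"]
    }


print(required_arguments(tools_list_result))
```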

3.3. Calling a Tool and Using a Prompt

```python
# Inside the `async with client:` block.
# Call the get_weather tool with its required "city" argument.
weather_result = await client.call_tool("get_weather", {"city": "Helsinki"})
pp.pprint(weather_result)

# Render the get_weather prompt template with its "location" argument.
prompt_result = await client.get_prompt("get_weather", {"location": "Tampere"})
pp.pprint(prompt_result)
```

These lines demonstrate how to invoke a server-side tool (get_weather) with parameters and how to generate a prompt for an LLM using the server's prompt template. The results are printed for inspection.

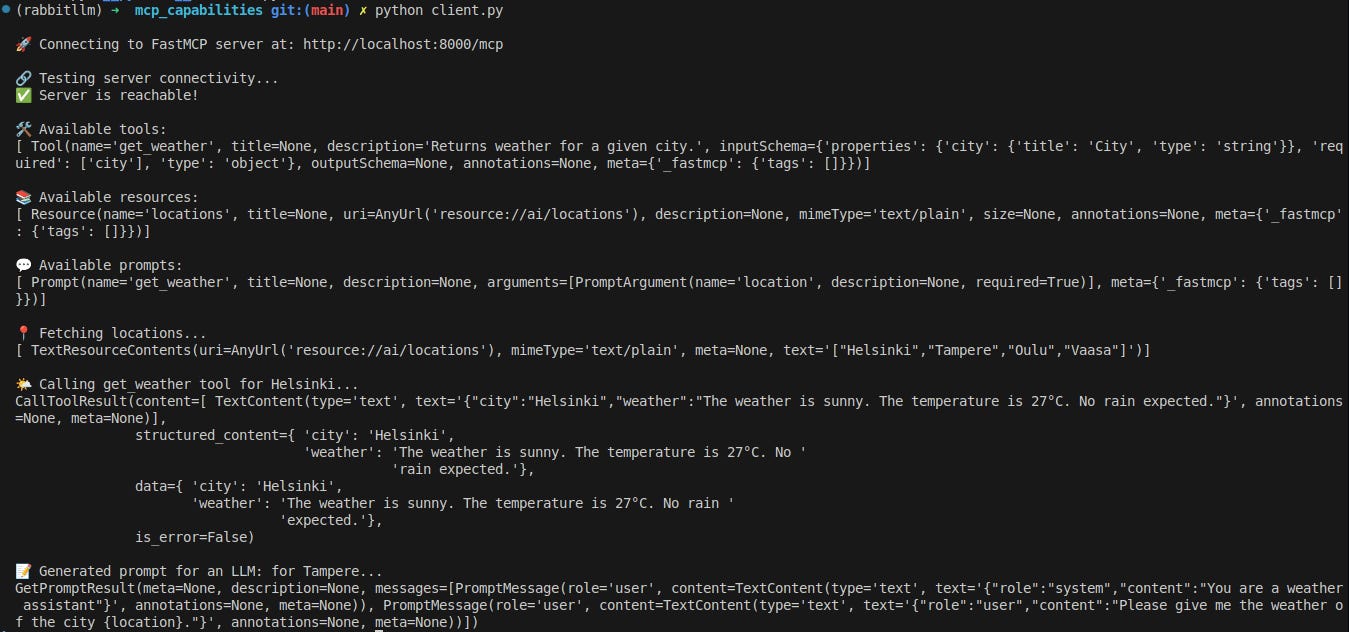
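At the protocol level, a tools/call result wraps the tool's return value in a list of content items, with an isError flag for tool-level failures. Assuming the server serializes the returned dictionary as JSON text in a single text item (a common choice, but check what your FastMCP version actually sends), a client can recover the original fields as sketched below. The raw result is hand-written for illustration, and decode_text_result is a hypothetical helper.

```python
import json
from typing import Any

# Hand-written tools/call result in the MCP specification's shape.
raw_result: dict[str, Any] = {
    "content": [
        {
            "type": "text",
            "text": json.dumps(
                {
                    "city": "Helsinki",
                    "weather": "The weather is sunny. The temperature is 27°C. No rain expected.",
                }
            ),
        }
    ],
    "isError": False,
}


def decode_text_result(result: dict[str, Any]) -> Any:
    # Tool-level failures are reported via isError rather than a JSON-RPC error.
    if result.get("isError"):
        raise RuntimeError(result["content"][0]["text"])
    # Join all text items, then parse them as JSON.
    text = "".join(item["text"] for item in result["content"] if item["type"] == "text")
    return json.loads(text)


print(decode_text_result(raw_result)["city"])
```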

3.4. Running the Client to interact with the server

Start the client, with the server still running in another terminal:

```bash
python mcp_capabilities/client.py
```

When you run the client, you will see:

Server connectivity check

List of tools, resources, and prompts

Fetched locations

Weather information for a city

Generated prompt for a city

🔑 Key Takeaways

Tools are functions the AI can call to perform actions.

Resources are read-only data sources that inform the AI’s reasoning.

Prompts are behavioral templates that guide how the AI responds.

Each capability is modular, discoverable, and composable, much like Kubernetes objects. Knowing whether a piece of functionality is an action (a tool), read-only context (a resource), or a reusable instruction template (a prompt) helps you build AI systems that are easier to maintain and extend.